Lab 3

Lab Goals

Integrate all components previously worked on in labs 1-2 and milestones 1-2. This consists of creating a robot that:

- Starts on a 660 Hz tone

- Navigates a small test maze autonomously

- Sends maze information it discovers wirelessly to a base station

- Displays the updates on the base station (used for debugging)

Prelab

- Discuss a method to encode maze information

- Kenneth designed the Maze class and software hierarchy

- Final maze has the following specifications:

- 9 × 9 squares

- Each square can be explored or unexplored

- Each square can have walls on any or all sides

- Each square can have treasures (with three shapes and two colors)

- Each square can potentially have other robots or decoys

- Review and install the RF24 Arduino Library

- Review and install the TA-designed graphical user interface (GUI)

Sub-Teams

To begin, we split into two groups of two. Each group progressed through the lab independently, as described below.

- Robot Integration Team = Brian and Kenneth

- Radio Team = Eric and Tyler

- NOTE - due to the complexity and interconnectedness of the functions in this lab, we frequently came together as one large group to track progress and talk through issues

Radio Team

GOAL: send maze information wirelessly between Arduinos

Materials Used

- 2 × Arduino Uno

- 2 × Nordic nRF24L01+ transceivers

- 16 × Female-to-Male jumper wires (instead of the radio breakout board with header)

- NOTE - we crimped these wire connectors ourselves

Getting Started

- Plug the 2 radios into our 2 Arduinos using the female-to-male jumper wires we constructed

- IMPORTANT - the radios use 3.3V, NOT 5V

- Initially, we powered the radios using the Bench Power Supply

- This allowed us to observe the current draw

- We used this to verify that our hardware was not broken

- Once we got our system working, we transitioned to using the 3.3V pin on the Arduino

- Instead of using the recommended RF24 Arduino Library, we used a newer, optimized version

- Recommended --> RF24 Arduino Library (last updated 5 years ago)

- What we used --> Optimized fork of nRF24L01 for Arduino (currently being updated)

- Documentation and Class Reference page: LINK

- To begin, we used the "GettingStarted" example sketch

- We changed the address identifier numbers for our system as specified in the lab instructions

- Formula: 2(3D+N)+X

- D (day of lab) = 4 (Thursday)

- N (team number) = 21

- X (radio designator) = 0 for one radio, 1 for the other radio (need two identifiers)

- Channel Numbers = 110 and 111 in decimal = 0x6E and 0x6F in hex

const uint64_t addresses[2] = {0x000000006ELL, 0x000000006FLL};

Testing the Radios

- We programmed the "GettingStarted" sketch onto both Arduinos

- Allowed us to open a serial monitor for each radio simultaneously

- Initial Issues:

- Broken hardware

- Broken female-to-male jumper wire (no connectivity)

- After addressing these issues, we were able to experiment with the radios

- We cycled through the power level settings to test the range

- Minimum of 15 feet range is needed for the final competition

- RF24_PA_MIN = 7 feet

- RF24_PA_LOW = 10 feet

- RF24_PA_HIGH = 17 feet

- RF24_PA_MAX = 25 feet --> we decided to use this for extra reliability

- NOTE - straight-line distance was measured in the hallway with no obstructions

- NOTE - these are the distances at which no packet loss was detected over 25 consecutive transmissions
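The choice of power level above can be written as a simple filter against the 15-foot requirement. This is only a sketch using the ranges we measured. The `PowerLevel` struct and `usableLevels` helper are illustrative names, not part of the RF24 library, and the setting names are stored as text so the snippet runs without the radio attached.

```cpp
#include <cassert>
#include <string>
#include <vector>

// One row of the range test: an RF24 power setting and its measured range.
struct PowerLevel {
    std::string name;  // RF24 constant name, kept as text (no library needed)
    int rangeFeet;     // loss-free range over 25 consecutive transmissions
};

// Ranges measured in the hallway test described above.
const std::vector<PowerLevel> MEASURED = {
    {"RF24_PA_MIN", 7},
    {"RF24_PA_LOW", 10},
    {"RF24_PA_HIGH", 17},
    {"RF24_PA_MAX", 25},
};

// Returns every setting whose measured range meets the required distance.
std::vector<PowerLevel> usableLevels(const std::vector<PowerLevel>& levels,
                                     int requiredFeet) {
    assert(requiredFeet > 0);
    std::vector<PowerLevel> ok;
    for (const PowerLevel& p : levels) {
        if (p.rangeFeet >= requiredFeet) {
            ok.push_back(p);
        }
    }
    return ok;
}
```

With a 15-foot requirement, only RF24_PA_HIGH and RF24_PA_MAX pass. RF24_PA_HIGH clears the requirement by 2 feet, which is why we chose RF24_PA_MAX for the larger margin.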

Sending Maze Information Between Arduinos

- Our software is structured such that a Maze object is stored on both the Robot and the Base Station

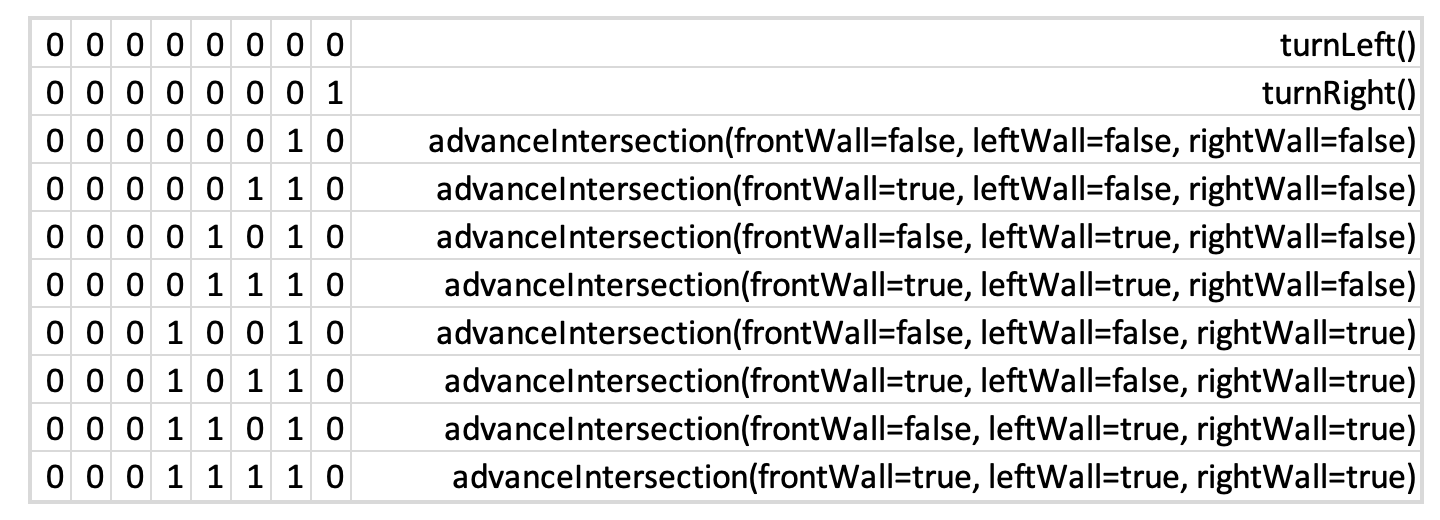

- The Maze object is updated using one of the following commands:

- turnLeft()

- turnRight()

- advanceIntersection()

- At a high level, this process looks as follows:

- Robot logic determines what movement to take and updates its Maze object

- Robot encodes the Maze update function using the chart below

- Robot transmits this instruction, in 1 byte, to the base station

- Base station receives the 1 byte instruction

- Base station decodes the instruction using the chart below

- Base station calls the Maze update method, which keeps the two Maze objects equivalent

- Due to the simplicity of this structure, we can encode all of the information in 1 byte

- Our receiver is set to send an Ack Payload confirming receipt of the instruction

- We intend on making this error checking more robust

Figure 1 - Byte encoding chart
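To make the 1-byte scheme concrete, here is a minimal sketch of an encoder and decoder. The bit layout is an assumption chosen for illustration and may not match the chart in Figure 1. The names `Opcode`, `Instruction`, `encodeInstruction`, and `decodeInstruction` are likewise hypothetical and are not taken from our nordic_rf library.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical layout (the real chart in Figure 1 may differ):
//   bits 7..6 : opcode (1 = turnLeft, 2 = turnRight, 3 = advanceIntersection)
//   bit 2     : front wall   (advanceIntersection only)
//   bit 1     : left wall    (advanceIntersection only)
//   bit 0     : right wall   (advanceIntersection only)
// Opcode 0 is left unused so an all-zero byte is never a valid instruction.
enum Opcode : uint8_t { OP_TURN_LEFT = 1, OP_TURN_RIGHT = 2, OP_ADVANCE = 3 };

struct Instruction {
    Opcode op;
    bool frontWall;
    bool leftWall;
    bool rightWall;
};

// Packs one Maze update into a single byte.
uint8_t encodeInstruction(const Instruction& in) {
    uint8_t b = static_cast<uint8_t>(in.op << 6);
    if (in.op == OP_ADVANCE) {
        b = static_cast<uint8_t>(b | (in.frontWall << 2) | (in.leftWall << 1) |
                                 (in.rightWall << 0));
    }
    return b;
}

// Unpacks a received byte. Returns false if the opcode is not recognized.
bool decodeInstruction(uint8_t b, Instruction& out) {
    uint8_t op = static_cast<uint8_t>(b >> 6);
    if (op == 0) {
        return false;  // opcode 0 is reserved as "invalid" in this sketch
    }
    out.op = static_cast<Opcode>(op);
    out.frontWall = ((b >> 2) & 1) != 0;
    out.leftWall = ((b >> 1) & 1) != 0;
    out.rightWall = (b & 1) != 0;
    return true;
}
```

On the base station, the decoded `op` would select which of the three Maze methods to call, passing the wall flags to advanceIntersection().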

Creating the Base Station

- Our base station Arduino is programmed with a different main sketch than our robot

- The two sketches share the same sub-function libraries (.cpp and .h files)

- Maze.h and Maze.cpp

- nordic_rf.h and nordic_rf.cpp

- Robot is hard-coded to perform Transmitter setup and only call Transmitter functions

- Base station is hard-coded to perform Receiver setup and only call Receiver functions

- The base station has a main loop that runs the following processes:

- Receive transmission from robot (1 byte)

- Decode the transmission using the table in Figure 1 (above)

- Run the required command to update the base station Maze object

- Transmit the updated information to the GUI

- Calls the command: getGUIMessage(byte x, byte y)

- x = current x location = getX()

- y = current y location = getY()

Base Station-to-GUI Transmission

- The getGUIMessage(byte x, byte y) function takes the x and y coordinates of the current robot position and prints out the correct GUI string across the serial connection to the PC.

- The correct GUI string is a comma-separated string containing the row and column positions, followed by whether a wall is present on each of the four sides of the robot's square.

- Because the base station keeps its own Maze object and updates it every time it receives a transmission from the robot, this function only needs to read the wall and robot-position fields of that object to produce the correct information.
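The string construction described above could look roughly like this. The exact field names and ordering expected by the TA GUI are not reproduced in this report, so the `north=`/`east=`/`south=`/`west=` keys, the row-before-column order, and the `CellWalls` and `formatGuiMessage` names are all assumptions made for illustration. On the Arduino the result would be written with Serial.println() rather than returned as a std::string.

```cpp
#include <cassert>
#include <string>

// Wall flags for the square the robot currently occupies (hypothetical type).
struct CellWalls {
    bool north;
    bool east;
    bool south;
    bool west;
};

// Builds one comma-separated GUI line from the robot position and wall flags.
// x is the column and y is the row; the row is written first (assumed order).
std::string formatGuiMessage(int x, int y, const CellWalls& w) {
    assert(x >= 0 && x < 9 && y >= 0 && y < 9);
    auto flag = [](bool b) { return b ? "true" : "false"; };
    return std::to_string(y) + "," + std::to_string(x) +
           ",north=" + flag(w.north) +
           ",east=" + flag(w.east) +
           ",south=" + flag(w.south) +
           ",west=" + flag(w.west);
}
```

For a robot at x = 2, y = 3 with walls to the north and west, this sketch produces `3,2,north=true,east=false,south=false,west=true`.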

Robot Integration Team

GOAL: integrate the robot with the GUI, explore the full test maze, and update the GUI

Materials Used

- Our robot

- All code from past labs

- IR Decoy

- 660 Hz tone generator

- Partner with another team to show robot avoidance

- Walls to build the maze setup (follow this layout)

Maze layout for testing

Discuss Data Storage / Maze Object

- We defined a C++ class Maze to store our maze information. It has the following fields:

- byte pos: Indicates the robot position. The 4 MSBs make up the X coordinate of the robot, and the 4 LSBs make up the Y coordinate of the robot. Origin (0,0) is the top left corner of the grid.

- byte heading: Indicates the direction the robot is facing. 0 is North, 1 is East, 2 is South, and 3 is West.

- short visited[9]: Keeps track of which squares on the grid have already been visited. The array has 9 shorts (16 bits each), one per row of the grid. The 9 LSBs of each short represent the columns in that row. A bit is set to 1 if the corresponding (x,y) coordinate has been visited, and 0 if not. For example, the 5th LSB of visited[3] records whether the coordinate (5,3) was visited.

- byte walls[18]: This is our most complicated encoding. There are a total of 18 grid lines (one through each of the 9 rows and one through each of the 9 columns). Along each grid line, there can be a total of 8 walls (excluding the external bounding walls of the 9x9 grid). For example, if we follow the grid line that goes through the first row of the grid, there are 8 spots along that line where a wall could exist. We use this fact to encode the walls we have seen in a data structure with 0 wasted bits: 18 grid lines × 8 walls = 144 bits = 18 bytes. Each grid line gets one byte, and each bit corresponds to one of the walls that could exist on that grid line. We set a bit to 1 if we see the corresponding wall, and leave it at 0 if that wall does not exist.

- As discussed earlier, the Maze object has a 3-function interface. This narrow interface makes it extremely easy to reason about updating the maze, and all our complicated bit-shifting and arithmetic is handled internally. Here is the interface:

- void turnLeft(): Updates the heading of the robot in the Maze object

- void turnRight(): Updates the heading of the robot in the Maze object

- void advanceIntersection(bool frontWall, bool leftWall, bool rightWall): Updates the position of the robot in the Maze object, and sets all of the wall bits in walls[18] based on the robot's new position and heading. This function handles all of the bit shifting and bit masking internally.
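The fields and interface described above can be sketched as follows. This is not our actual Maze.cpp. In particular, the mapping from a wall to an index and bit in walls[18], the use of bit x (counting from 0 at the LSB) in visited[y], the constructor arguments, and the helper names (`wallSlot`, `hasWall`, `wasVisited`) are assumptions made for illustration. Fixed-width types stand in for the Arduino `byte` and `short`.

```cpp
#include <cassert>
#include <cstdint>

class Maze {
public:
    static constexpr int SIZE = 9;
    uint8_t pos;                  // 4 MSBs = x, 4 LSBs = y, origin at top left
    uint8_t heading;              // 0 = North, 1 = East, 2 = South, 3 = West
    uint16_t visited[SIZE] = {};  // visited[y] bit x set once (x,y) is visited
    uint8_t walls[2 * SIZE] = {}; // [0..8] per row, [9..17] per column (assumed)

    Maze(int x, int y, uint8_t startHeading)
        : pos(static_cast<uint8_t>((x << 4) | y)), heading(startHeading & 3) {
        visited[y] |= static_cast<uint16_t>(1u << x);
    }

    int getX() const { return pos >> 4; }
    int getY() const { return pos & 0x0F; }
    void turnLeft() { heading = (heading + 3) & 3; }   // counter-clockwise
    void turnRight() { heading = (heading + 1) & 3; }  // clockwise

    // Move one square along the heading, then record walls seen from there.
    void advanceIntersection(bool frontWall, bool leftWall, bool rightWall) {
        static const int dx[4] = {0, 1, 0, -1};
        static const int dy[4] = {-1, 0, 1, 0};  // North decreases y
        int x = getX() + dx[heading];
        int y = getY() + dy[heading];
        assert(x >= 0 && x < SIZE && y >= 0 && y < SIZE);
        pos = static_cast<uint8_t>((x << 4) | y);
        visited[y] |= static_cast<uint16_t>(1u << x);
        if (frontWall) setWall(heading);
        if (leftWall) setWall((heading + 3) & 3);
        if (rightWall) setWall((heading + 1) & 3);
    }

    bool wasVisited(int x, int y) const { return ((visited[y] >> x) & 1) != 0; }

    // Outer boundary walls are not stored; this sketch treats them as present.
    bool hasWall(int x, int y, int dir) const {
        int index = 0, bit = 0;
        if (!wallSlot(x, y, dir, index, bit)) return true;
        return ((walls[index] >> bit) & 1) != 0;
    }

private:
    // Maps (square, side) to a byte index and bit; false for boundary walls.
    static bool wallSlot(int x, int y, int dir, int& index, int& bit) {
        switch (dir) {
            case 0: if (y == 0) return false; index = SIZE + x; bit = y - 1; return true;
            case 1: if (x == SIZE - 1) return false; index = y; bit = x; return true;
            case 2: if (y == SIZE - 1) return false; index = SIZE + x; bit = y; return true;
            default: if (x == 0) return false; index = y; bit = x - 1; return true;
        }
    }

    void setWall(int dir) {
        int index = 0, bit = 0;
        if (wallSlot(getX(), getY(), dir, index, bit)) {
            walls[index] |= static_cast<uint8_t>(1u << bit);
        }
    }
};
```

Because each interior wall is shared by two squares, both squares map to the same bit in this sketch, so a wall recorded from one side is visible from the other.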

Integrate Radio Communication into Software

- Because our systems were already well integrated after Milestone 2, only minimal changes were needed for Lab 3

- First, we integrated our new sub-function libraries

- Maze.h and Maze.cpp

- nordic_rf.h and nordic_rf.cpp

- As previously mentioned, these are the exact same files that the base station uses (modularity!)

- Second, we updated our main loop movement logic

- The code is identical to the code from Milestone 2, with the addition of just 5 new lines

// wall detected at intersection

if ((sensorStatus[0] == 0) && (sensorStatus[1] == 0) && (sensorStatus[2] == 0) && detect_wall_6in(3) ) {

if (detect_wall_6in(1)){

turnLeftIntersection(servo_L, servo_R);

send_advance_intersection(radio, true, false, true); //NEW LINE

send_turn_left(radio); //NEW LINE

}

else{

turnRightIntersection(servo_L, servo_R);

send_advance_intersection(radio, true, false, false); //NEW LINE

send_turn_right(radio); //NEW LINE

}

}

// wall detected but not at an intersection

else if (detect_wall_3in(3)) {

turnLeft(servo_L, servo_R);

send_turn_left(radio); //NEW LINE

}

// no wall detected --> just track the line

else if (sensorStatus[0] == 0) //turn Right

adjustRight(servo_L, servo_R, 85);

else if (sensorStatus[2] == 0) //turn Left

adjustLeft(servo_L, servo_R, 85);

else {

moveForward(servo_L, servo_R);

}

Full System Testing

This video demonstration shows our robot completing all required behaviors:

- Starts on a 660 Hz tone

- Navigates a small test maze autonomously (line tracking and wall detection)

- Sends maze information wirelessly to the base station

- Displays updates on the base station GUI

- Stops for enemy robots (IR Hat) and ignores Decoys

- We updated our IR detection system to increase range and accuracy

- To boost range beyond the 1-foot requirement, we added a second amplifier

- To better differentiate between Robots and Decoys, we use our FFT to check for both the 6.08 kHz IR value and its second harmonic frequency
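The two-frequency check described above can be sketched as a lookup into the FFT magnitude bins. The sample rate, FFT size, and threshold are not stated in this report, so they are parameters here and the values in the usage note are assumptions. The function names `binForFrequency` and `isRobotSignal` are hypothetical, and the magnitudes are assumed to come from whatever FFT routine the robot already runs.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Index of the FFT bin closest to freqHz for an fftSize-point FFT.
std::size_t binForFrequency(double freqHz, double sampleRateHz,
                            std::size_t fftSize) {
    return static_cast<std::size_t>(
        std::lround(freqHz * static_cast<double>(fftSize) / sampleRateHz));
}

// True only if both the 6.08 kHz bin and its second-harmonic bin (12.16 kHz)
// exceed the threshold. A source with energy at only one of them is rejected.
bool isRobotSignal(const std::vector<double>& magnitudes, double sampleRateHz,
                   std::size_t fftSize, double threshold) {
    const double fundamentalHz = 6080.0;
    std::size_t fundamentalBin =
        binForFrequency(fundamentalHz, sampleRateHz, fftSize);
    std::size_t harmonicBin =
        binForFrequency(2.0 * fundamentalHz, sampleRateHz, fftSize);
    assert(harmonicBin < magnitudes.size());
    return magnitudes[fundamentalBin] > threshold &&
           magnitudes[harmonicBin] > threshold;
}
```

As an example, with an assumed 40 kHz sample rate and a 256-point FFT, the bins are 156.25 Hz wide, so 6.08 kHz falls in bin 39 and 12.16 kHz in bin 78.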